Syncast Tech Report Vol.1

Offline Image Recognition × Edge AI × Ditto

Have you given up on DX (digital transformation) because of "Security Barriers" or "Lack of Connectivity"?

K&K Solution is running technical verifications of "contactless, retrofit" digitization for equipment that cannot be connected to a network, using cameras and Edge AI. This report presents methods for collecting numerical data from monitors and analog meters using only local device-to-device communication, with no internet connection, and discusses what these methods make possible.

Verification Summary

- Result: Successfully digitized and synced legacy monitor data without any internet connection.

- Tech Achievement: Built a secure "Retrofit IoT" setup by combining Edge AI data extraction with Ditto offline sync.

- Applicability: Confirmed practicality for air-gapped environments such as aircraft, tunnels, and critical infrastructure.

Background: The "3 Barriers" We Aimed to Solve

In many workplaces, valuable data shown on screens is never recorded or reused. We hypothesized that the following three barriers are the main factors stalling DX, and ran this verification to overcome them without modifying existing equipment at all.

- Security: Control panels and critical infrastructure often operate under strict "air-gapped" policies that forbid any external network connection.

- Modification cost and risk: Modifying legacy systems incurs large vendor fees, plus the risks of system downtime and re-validation.

- Connectivity: Conventional IoT devices cannot be used where Wi-Fi and cellular signals are unavailable, such as mountains, underground tunnels, or aircraft cabins.

Verification 1: Offline OCR & Data Sync

"From Analog Display to Digital Data"

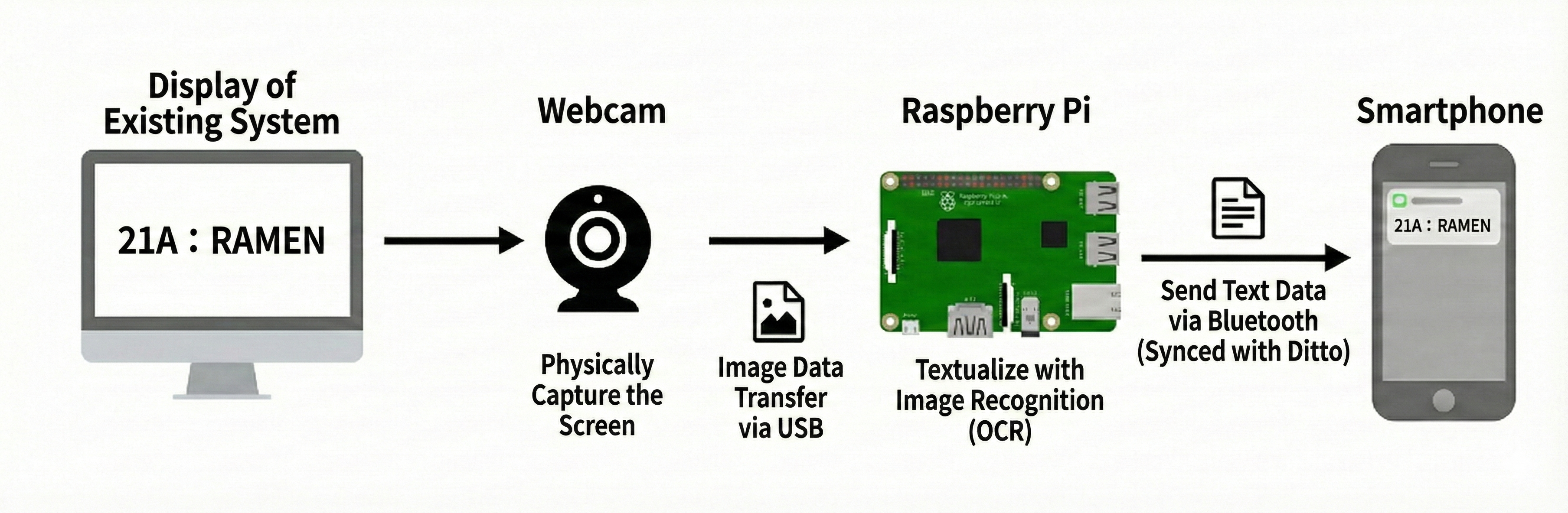

We built a system in which a webcam captures the screen, Edge AI extracts the displayed values, and Ditto broadcasts the results as notifications to nearby devices.
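The capture, extract, and broadcast flow described above can be sketched as a simple polling loop. This is a minimal sketch, assuming the camera, OCR, and sync steps are passed in as plain functions; `capture`, `extract`, `publish`, and `run_loop` are hypothetical names, not taken from the report. The rule of publishing a reading only when it changes, so that an unchanged display does not produce repeated notifications, is also our assumption.

```python
import time
from typing import Callable, Optional

Capture = Callable[[], bytes]                 # returns one camera frame (hypothetical)
Extract = Callable[[bytes], Optional[float]]  # frame -> reading, or None if OCR failed
Publish = Callable[[float], None]             # hands the reading to the sync layer


def run_once(capture: Capture, extract: Extract, publish: Publish,
             last_value: Optional[float]) -> Optional[float]:
    """Run one capture -> extract -> publish cycle; return the last published value."""
    value = extract(capture())
    if value is None:
        return last_value          # unreadable frame: keep the previous value
    if value != last_value:
        publish(value)             # only changed readings are sent
        return value
    return last_value


def run_loop(capture: Capture, extract: Extract, publish: Publish, cycles: int,
             interval_s: float = 1.0,
             sleep: Callable[[float], None] = time.sleep) -> Optional[float]:
    """Poll the display `cycles` times, waiting `interval_s` seconds between polls."""
    last: Optional[float] = None
    for _ in range(cycles):
        last = run_once(capture, extract, publish, last)
        sleep(interval_s)
    return last


if __name__ == "__main__":
    # Fake frames and readings stand in for the webcam and OCR.
    frames = iter([b"f1", b"f2", b"f3", b"f4"])
    readings = {b"f1": 12.5, b"f2": 12.5, b"f3": None, b"f4": 13.0}
    sent: list = []
    run_loop(lambda: next(frames), readings.get, sent.append, cycles=4,
             sleep=lambda s: None)
    print(sent)  # [12.5, 13.0]
```

Injecting the three steps as functions keeps the loop testable without a camera, and lets the same loop drive either the OCR path or a different extractor.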

System Configuration & Process Flow

- Hardware: Raspberry Pi 5 and an off-the-shelf USB webcam.

- Software: Python (Tesseract) for OCR, integrated with Node.js (Ditto) for sync.
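Tesseract returns plain text, so the Python side needs a step that turns that text into a number. The report does not show this step. The following is a minimal sketch, assuming the OCR text comes from something like `pytesseract.image_to_string` run on a cropped display region. The look-alike character table, the unit suffixes, and the function name `parse_reading` are hypothetical and would need tuning for a specific display; the `lo`/`hi` range check rejects obvious misreads instead of passing them on. Restricting Tesseract itself to digits (for example with its `tessedit_char_whitelist` setting) is a complementary option.

```python
import re
from typing import Optional

# A reading: optional sign, digits, optional decimal part.
NUMBER = re.compile(r"[-+]?\d+(?:\.\d+)?")

# Assumed OCR look-alike substitutions; tune for the actual display font.
LOOKALIKES = str.maketrans({"O": "0", "o": "0", "I": "1", "l": "1", "S": "5", "B": "8"})

# Assumed unit characters that may stick to the number, e.g. "23.5C", "80%", "1013hPa".
UNIT_SUFFIXES = "%°CVAhPa"


def parse_reading(raw: str, lo: float, hi: float) -> Optional[float]:
    """Return the first token of `raw` that reads as a number within [lo, hi], else None."""
    for token in raw.split():
        # Fix look-alike characters, then drop trailing unit characters.
        candidate = token.translate(LOOKALIKES).rstrip(UNIT_SUFFIXES)
        if NUMBER.fullmatch(candidate):
            value = float(candidate)
            if lo <= value <= hi:   # plausibility check against the meter's range
                return value
    return None


if __name__ == "__main__":
    print(parse_reading("Temp: 23.5C\n", -40, 120))  # 23.5
    print(parse_reading("l2.5", 0, 100))             # 12.5
    print(parse_reading("999", 0, 100))              # None
```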

This configuration can be deployed securely with "Zero Interference" with existing cables and programs, and it runs without any communication costs.
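The report does not say how the Python and Node.js halves exchange data. One simple option, assumed here, is for the Python process to write newline-delimited JSON to stdout, and for the Node.js process that holds the Ditto instance to read it line by line (for example by spawning the script with Node's `child_process.spawn` and reading stdout with the `readline` module) and write each record into a Ditto collection. The record shape and field names (`_id`, `device`, `value`, `ts`) and the helper names are hypothetical.

```python
import json
import sys
import time
from typing import Optional, TextIO


def reading_record(device_id: str, value: float, ts: Optional[float] = None) -> dict:
    """Build a hypothetical record for one reading (field names are illustrative)."""
    return {
        "_id": device_id,   # assumption: one latest-reading document per device
        "device": device_id,
        "value": value,
        "ts": time.time() if ts is None else ts,  # Unix time in seconds
    }


def emit(record: dict, out: TextIO = sys.stdout) -> None:
    """Write one record as a single JSON line and flush so the reader sees it immediately."""
    out.write(json.dumps(record, separators=(",", ":")) + "\n")
    out.flush()


if __name__ == "__main__":
    emit(reading_record("meter-01", 12.5))
```

Keying the record by device means the receiving side can overwrite one "latest reading" document per device rather than accumulate history; if a history is needed, a per-reading id would be the alternative.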

Verification 2: Edge Vision-Language Model (VLM)

In addition to reading "numbers" via OCR, we verified the operation of a Vision-Language Model (VLM) running on a single Raspberry Pi unit.

This is an attempt to perform advanced recognition ("understanding what an image means") entirely on the edge device, without using cloud LLMs.

Experiment: Image Understanding

We ran a local VLM on a Raspberry Pi 4 and executed inference on a test image with a text prompt.

Results & Outlook for Surveillance Solutions

- Advanced Recognition: The model described in text not only textual information but also objects (a person, a car, a dog) and the relationships between them (e.g., "sitting next to").

- Processing Time Barrier: Inference currently takes about 4 minutes per run (including image encoding), which is too slow for real-time monitoring.

- Future Measures: We aim to reach practical speeds through model quantization (making the model lighter) and motion-detection triggers (running the VLM only when movement occurs).

- Potential Applications: This technology could enable autonomous surveillance in communication dead zones, such as detecting entry into dangerous factory areas or fixed-point observation of environmental changes (rising rivers, animal sightings).
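Model quantization, the first of the future measures listed above, stores weights at lower precision so the model needs less memory and often runs faster on small CPUs. The sketch below shows only the core idea, symmetric per-tensor int8 quantization of a list of weights; it is not the scheme used in this verification (the report does not specify one), and practical VLM toolchains typically use per-block scales and 4-bit formats. The function names are hypothetical. Stored as int8, each weight takes 1 byte instead of the 4 bytes of float32, roughly a 4× reduction before the small overhead of the scale.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # round() maps each weight to the nearest int8 step; the clamp guards float edge cases.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(w)
    approx = dequantize_int8(q, scale)
    # Each reconstructed weight should lie within half a quantization step of the original.
    print(q, max(abs(a - b) for a, b in zip(w, approx)) <= scale / 2)
```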

* Note: The text data generated in this verification can also be synced offline using the same mechanism (Ditto) as in Verification 1.
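The motion-detection trigger listed among the future measures can be sketched as frame differencing plus a cooldown. This is a minimal sketch under stated assumptions: frames are equal-size, flattened grayscale pixel lists (a real system would more likely use NumPy arrays or OpenCV's `cv2.absdiff`), and `changed_fraction`, `MotionGate`, and the thresholds `pixel_delta`, `min_fraction`, and `cooldown_s` are hypothetical names and values to tune. The 240-second default cooldown mirrors the roughly 4-minute inference time reported above, so a new VLM run is not requested while the previous one would still be in progress.

```python
from typing import Optional, Sequence


def changed_fraction(prev: Sequence[int], curr: Sequence[int], pixel_delta: int = 25) -> float:
    """Fraction of pixels whose grayscale value changed by more than `pixel_delta`."""
    if len(prev) != len(curr) or not curr:
        raise ValueError("frames must be non-empty and the same size")
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_delta)
    return changed / len(curr)


class MotionGate:
    """Allow a VLM run only when enough pixels changed and the cooldown has elapsed."""

    def __init__(self, min_fraction: float = 0.02, cooldown_s: float = 240.0):
        self.min_fraction = min_fraction
        self.cooldown_s = cooldown_s          # ~4 min, matching the reported inference time
        self._last_run: Optional[float] = None

    def should_run(self, prev: Sequence[int], curr: Sequence[int], now: float) -> bool:
        if changed_fraction(prev, curr) < self.min_fraction:
            return False                      # no meaningful motion
        if self._last_run is not None and now - self._last_run < self.cooldown_s:
            return False                      # previous run still in its cooldown window
        self._last_run = now
        return True


if __name__ == "__main__":
    still = [10] * 100
    moved = [10] * 90 + [200] * 10           # 10% of pixels changed
    gate = MotionGate(min_fraction=0.05)
    print(gate.should_run(still, moved, now=0.0))    # True
    print(gate.should_run(still, moved, now=60.0))   # False (cooldown)
```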

Future Applications & Use Cases

Solving offline field challenges with two approaches: OCR and VLM.

Edge AI Robot

Mounted on security and patrol robots, the VLM autonomously identifies and records "suspicious objects" or "anomalies," even in communication dead zones.

BCP / Disaster Recovery

If core systems go down, cameras read incoming emergency fax orders, digitize them offline, and sync them to the field.

Join us in developing this technology.

For inquiries about the Syncast series and Ditto, for consultation on building applications that use device-to-device communication, or if you are interested in joining this project as a Business Member or Development Member, please contact us via the form below or by email.